44 NLNL: Negative Learning for Noisy Labels

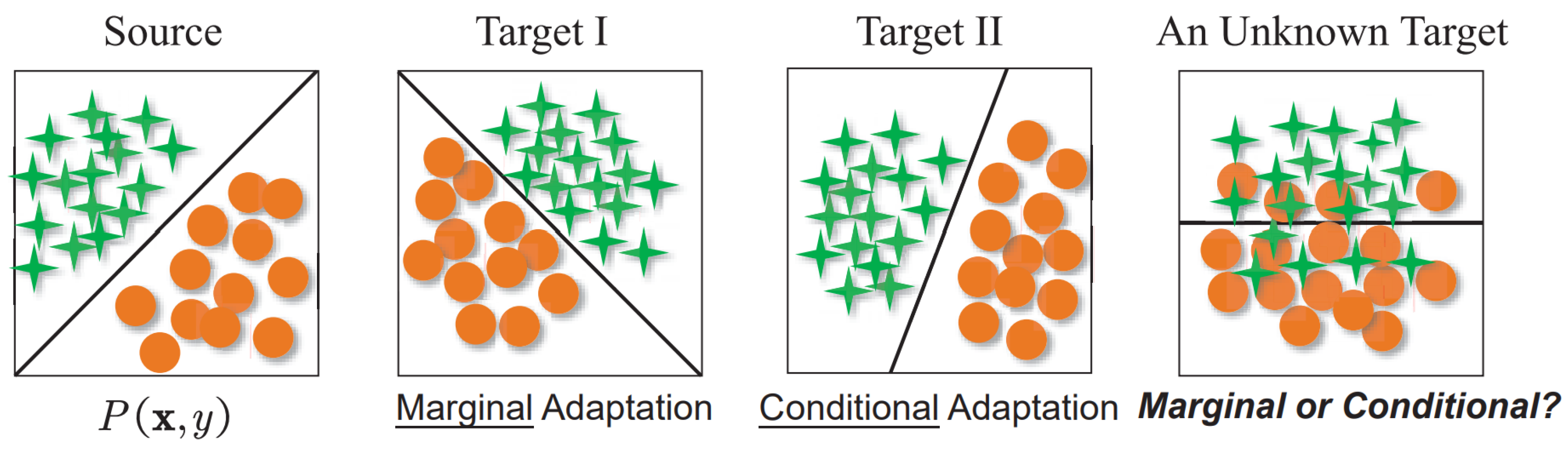

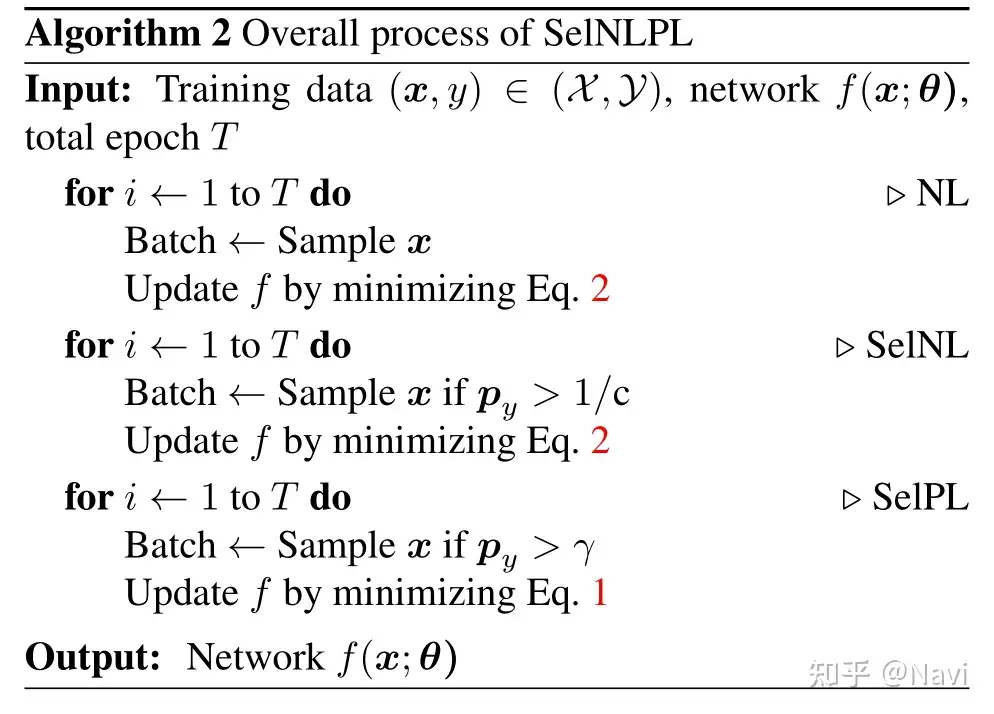

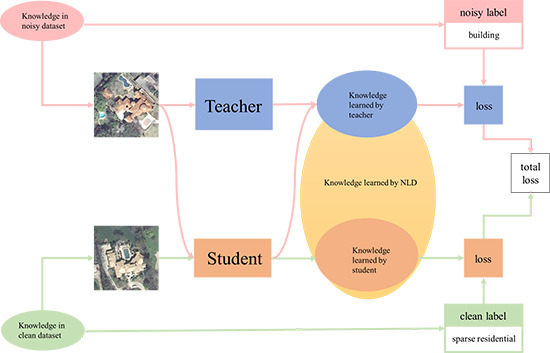

[1908.07387] NLNL: Negative Learning for Noisy Labels. However, if inaccurate labels, or noisy labels, exist, training with PL will provide wrong information, thus severely degrading performance. To address this issue, we start with an indirect learning method called Negative Learning (NL), in which the CNNs are trained using a complementary label as in "input image does not belong to this complementary label."

NLNL: Negative Learning for Noisy Labels - Semantic Scholar. (a) Division of training data into either clean or noisy data with a CNN trained with SelNLPL. (b) Training an initialized CNN with the clean data from (a); the noisy data's labels are then updated following the output of the CNN trained with clean data. (c) Clean data and label-updated noisy data are both used to train an initialized CNN in the final step.
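The Negative Learning idea quoted above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes the complementary label is drawn uniformly from every class except the given (possibly noisy) label, and that the NL loss penalizes probability mass on that complementary class via -log(1 - p). The function names are hypothetical, and plain Python is used in place of PyTorch to keep the sketch self-contained.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into a probability vector (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_complementary_label(given_label, num_classes, rng=random):
    """Pick a class uniformly from all classes except the given label (assumed scheme)."""
    k = rng.randrange(num_classes - 1)
    return k if k < given_label else k + 1


def positive_loss(probs, label, eps=1e-12):
    """PL: cross entropy, 'input image belongs to this label'."""
    return -math.log(max(probs[label], eps))


def negative_loss(probs, comp_label, eps=1e-12):
    """NL: 'input image does not belong to this complementary label'."""
    return -math.log(max(1.0 - probs[comp_label], eps))


if __name__ == "__main__":
    probs = softmax([2.0, 0.5, -1.0])
    given = 0  # label attached to the sample, possibly noisy
    comp = sample_complementary_label(given, num_classes=3)
    print("PL loss:", positive_loss(probs, given))
    print("NL loss:", negative_loss(probs, comp))
```

Minimizing the NL term pushes the probability of the complementary class toward zero instead of pushing the given label toward one, so the given label is only used indirectly.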


ydkim1293/NLNL-Negative-Learning-for-Noisy-Labels - GitHub (also listed on Open Source Agenda). PyTorch implementation for the paper NLNL: Negative Learning for Noisy Labels, ICCV 2019. Requirements: python3, pytorch, matplotlib. Generating noisy data: python3 noise_generator.py --noise_type val_split_symm_exc. Start training: simply run the sh file, run.sh.
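The snippet above does not say what noise_generator.py does internally. As a rough illustration of what generating noisy data can look like, the sketch below flips each label, with a chosen probability, to a uniformly chosen different class. Reading the flag value val_split_symm_exc as "symmetric noise that excludes the original class" is an assumption, and the function name and parameters are hypothetical; this is not the repository's script.

```python
import random


def add_symmetric_exclusive_noise(labels, num_classes, noise_rate, seed=0):
    """Return a copy of labels where each entry is replaced, with probability
    noise_rate, by a uniformly chosen *different* class (assumed noise model)."""
    rng = random.Random(seed)
    noisy = []
    for y in labels:
        if rng.random() < noise_rate:
            k = rng.randrange(num_classes - 1)
            noisy.append(k if k < y else k + 1)  # skip over the original class
        else:
            noisy.append(y)
    return noisy


if __name__ == "__main__":
    clean = [i % 10 for i in range(20)]
    noisy = add_symmetric_exclusive_noise(clean, num_classes=10, noise_rate=0.3)
    flipped = sum(a != b for a, b in zip(clean, noisy))
    print(f"{flipped} of {len(clean)} labels were flipped")
```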

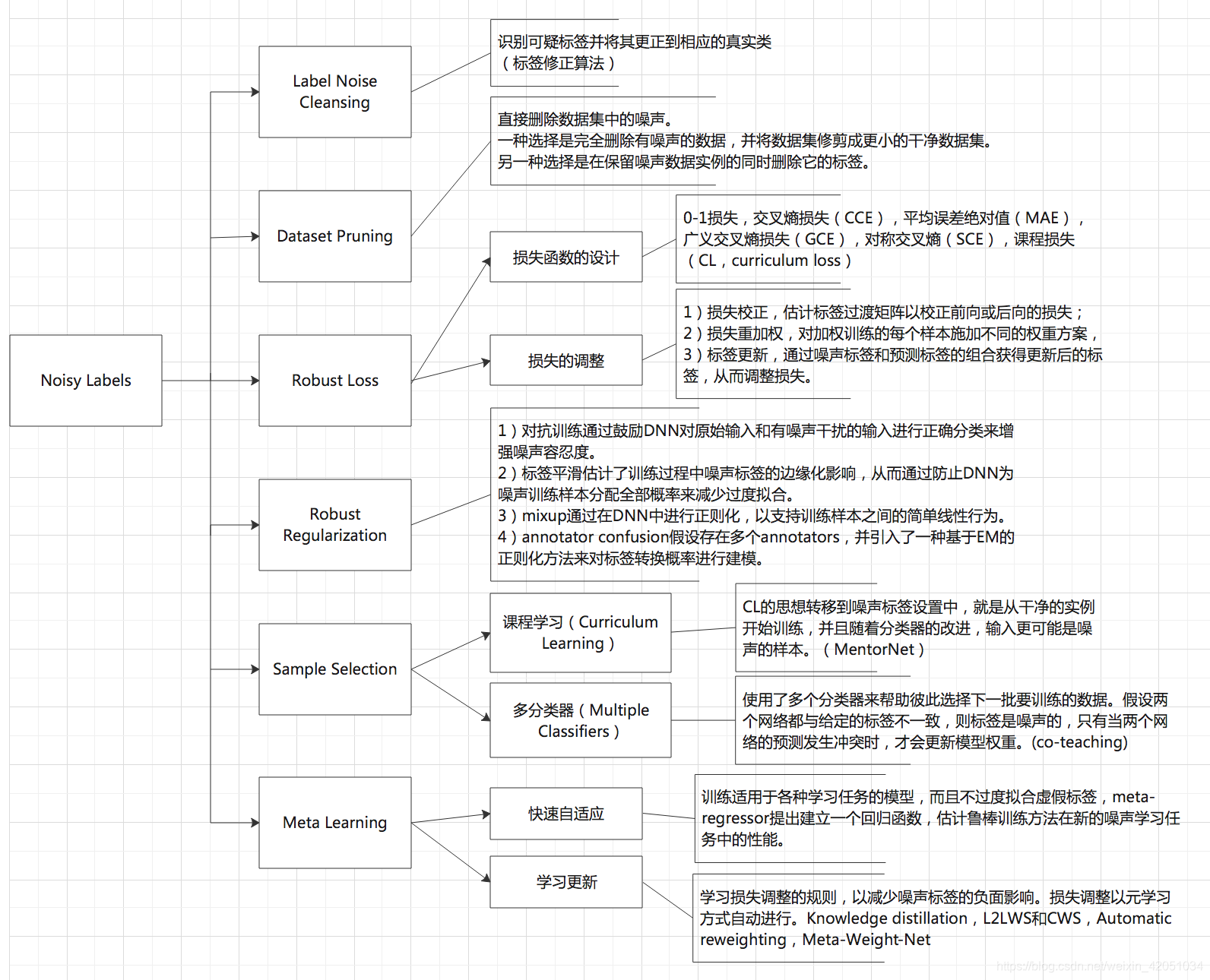

NLNL: Negative Learning for Noisy Labels | ICCV19-Paper-Review. Negative Learning is introduced to resolve the problem of noisy data classification and to save the model from overfitting. In Negative Learning CNNs are ...

NLNL: Negative Learning for Noisy Labels - UCF CRCV. Katalina Biondi. Paper by: Youngdong Kim, Junho Yim, Juseung Yun, Junmo Kim. School of Electrical Engineering, ...

PDF NLNL: Negative Learning for Noisy Labels. ... trained directly with a given noisy label; thus overfitting to a noisy label can occur even if the pruning or cleaning process is performed. Meanwhile, we use the NL method, which indirectly uses noisy labels, thereby avoiding the problem of memorizing the noisy label and exhibiting remarkable performance in filtering only noisy samples. Using complementary labels: This is not the first time that ...

NLNL: Negative Learning for Noisy Labels | Request PDF - ResearchGate. On Oct 1, 2019, Youngdong Kim and others published NLNL: Negative Learning for Noisy Labels.

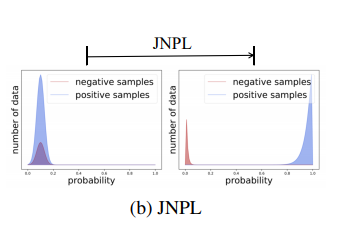

NLNL: Negative Learning for Noisy Labels - Semantic Scholar. Figure 1: Conceptual comparison between Positive Learning (PL) and Negative Learning (NL). Regarding noisy data, while PL provides the CNN with wrong information (red balloon), NL can, with a higher chance, provide the CNN with correct information (blue balloon), because a dog is clearly not a bird.

NLNL: Negative Learning for Noisy Labels - NASA/ADS. Because the chances of selecting a true label as a complementary label are low, NL decreases the risk of providing incorrect information. Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL).

[PDF] NLNL: Negative Learning for Noisy Labels - Semantic Scholar. Aug 19, 2019 ... This work uses an indirect learning method called Negative Learning (NL), in which the CNNs are trained using a complementary label as in ...
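The claim that the chances of selecting a true label as a complementary label are low can be made concrete with a small calculation. The sketch below assumes a simple noise model that the snippets do not specify: each label is flipped to a different class with probability noise_rate, and the complementary label is drawn uniformly from the classes other than the given label. Under those assumptions PL receives wrong information at rate noise_rate, while NL does so only at rate noise_rate / (num_classes - 1). The class count and noise rate used here are illustrative, and the function names are hypothetical.

```python
import random


def wrong_info_rate_pl(noise_rate):
    """PL is wrong whenever the given label itself is wrong."""
    return noise_rate


def wrong_info_rate_nl(noise_rate, num_classes):
    """NL is wrong only if the label is noisy AND the complementary draw
    happens to hit the true class (1 of the num_classes - 1 candidates)."""
    return noise_rate / (num_classes - 1)


def simulate_nl(noise_rate, num_classes, trials=100_000, seed=0):
    """Monte Carlo check of wrong_info_rate_nl under the same assumptions."""
    rng = random.Random(seed)
    wrong = 0
    for _ in range(trials):
        true = rng.randrange(num_classes)
        given = true
        if rng.random() < noise_rate:  # flip to a different class
            k = rng.randrange(num_classes - 1)
            given = k if k < true else k + 1
        k = rng.randrange(num_classes - 1)  # complementary label != given
        comp = k if k < given else k + 1
        wrong += comp == true
    return wrong / trials


if __name__ == "__main__":
    r, c = 0.3, 10
    print("PL wrong-information rate:", wrong_info_rate_pl(r))
    print("NL wrong-information rate:", wrong_info_rate_nl(r, c))
    print("NL rate, simulated:", simulate_nl(r, c))
```

With 10 classes and 30% noise, the closed-form NL rate is 0.3 / 9, roughly 3.3%, compared with 30% for PL.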

NLNL: Negative Learning for Noisy Labels - IEEE Xplore. Youngdong Kim, Junho Yim, Juseung Yun, Junmo Kim. School of Electrical Engineering, KAIST, South Korea.

NLNL: Negative Learning for Noisy Labels | Papers With Code NLNL: Negative Learning for Noisy Labels. Convolutional Neural Networks (CNNs) provide excellent performance when used for image classification. The classical method of training CNNs is by labeling images in a supervised manner as in "input image belongs to this label" (Positive Learning; PL), which is a fast and accurate method if the labels are assigned correctly to all images.

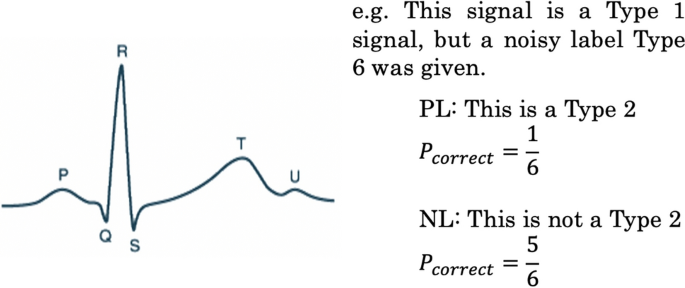

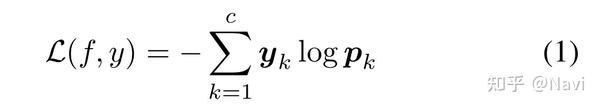

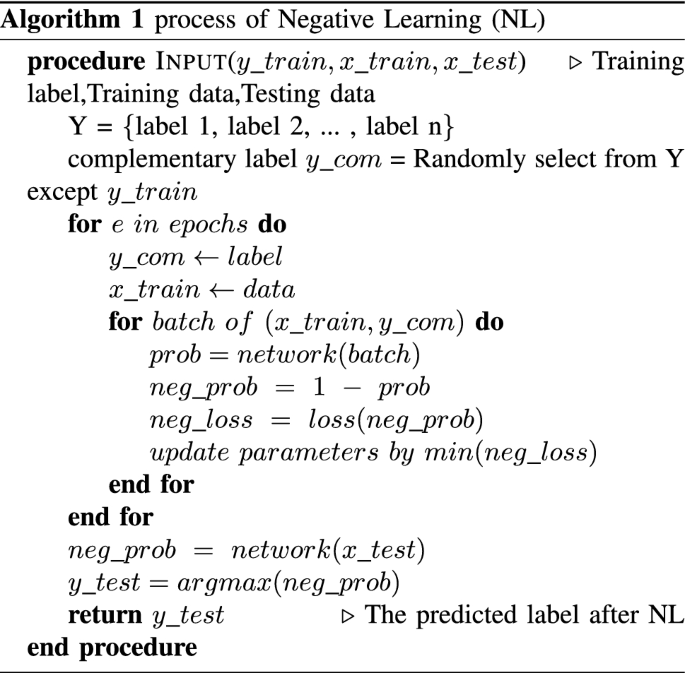

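Paper walkthrough of "NLNL: Negative Learning for Noisy Labels" - Zhihu. Under label noise, however, PL provides wrong information, and as training proceeds the model gradually fits the noisy labels, which lowers its performance. The authors therefore propose NL (Negative Learning): "input image does not belong to this complementary label". A complementary label states that the current training image does not belong to that label. For example, take a picture of a dog: its correct label is dog, its noisy label is car, and its complementary label is bird (this picture is not a bird). Although the complementary label is not the true label, ...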

NLNL: Negative Learning for Noisy Labels - IEEE Xplore Furthermore, to improve convergence, we extend our method by adopting PL selectively, termed as Selective Negative Learning and Positive Learning (SelNLPL). PL is used selectively to train upon expected-to-be-clean data, whose choices become possible as NL progresses, thus resulting in superior performance of filtering out noisy data. With simple semi-supervised training technique, our method achieves state-of-the-art accuracy for noisy data classification, proving the superiority of SelNLPL ...
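The filtering and label-update steps described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's procedure: a sample counts as "expected to be clean" when the model's confidence in its given label exceeds a threshold (the value 0.5 is only illustrative), and noisy samples are relabeled with the hard argmax prediction of a second model. All function and parameter names are hypothetical.

```python
def split_by_confidence(probs_per_sample, given_labels, threshold=0.5):
    """Split sample indices into expected-clean and expected-noisy sets,
    based on the predicted probability of each sample's given label."""
    clean, noisy = [], []
    for i, (probs, y) in enumerate(zip(probs_per_sample, given_labels)):
        (clean if probs[y] > threshold else noisy).append(i)
    return clean, noisy


def relabel_noisy(probs_from_clean_model, given_labels, noisy_indices):
    """Replace the labels of noisy samples with the argmax prediction of a
    model trained on the clean subset (hard labels are a simplification)."""
    updated = list(given_labels)
    for i in noisy_indices:
        probs = probs_from_clean_model[i]
        updated[i] = max(range(len(probs)), key=probs.__getitem__)
    return updated


if __name__ == "__main__":
    # Toy two-class outputs; in practice these come from trained networks.
    probs = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8]]
    labels = [0, 0, 1]
    clean, noisy = split_by_confidence(probs, labels)
    print("clean:", clean, "noisy:", noisy)
    print("updated labels:", relabel_noisy(probs, labels, noisy))
```

In the toy run the same probabilities are reused for both steps only for brevity; the pipeline in the source trains a freshly initialized CNN on the clean subset before updating the noisy labels.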

NLNL: Negative Learning for Noisy Labels - arXiv Vanity. Finally, semi-supervised learning is performed for noisy data classification, utilizing the filtering ability of SelNLPL (Section 3.5). 3.1 Negative Learning: As mentioned in Section 1, the typical method of training CNNs for image classification with given image data and the corresponding labels is PL.


![2021 CVPR] Joint Negative and Positive Learning for Noisy ...](https://i.ytimg.com/vi/1oSExxg9txY/hqdefault.jpg)

![PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/6-Table2-1.png)

![PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/4-Figure3-1.png)

![PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/3-Figure2-1.png)

![PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/7-Table6-1.png)

![PDF] NLNL: Negative Learning for Noisy Labels | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ab7539b938ca8a99b5fb34695e1b86ef0c6f3632/5-Figure6-1.png)
